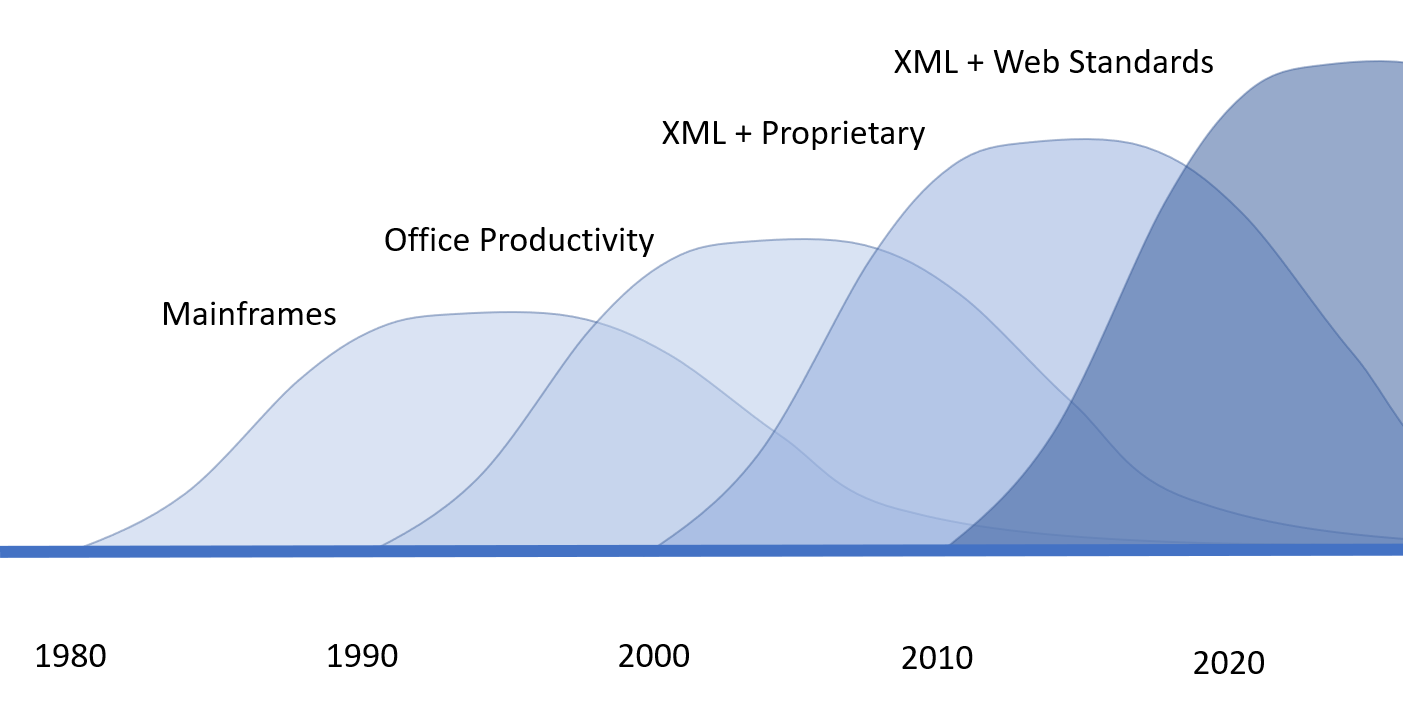

In my last blog, I wrote about how technology waves define distinct software generations and how choosing to ride the waves determines the risks – both in terms of development risk and time to obsolescence. In this blog, I’m going to explore the four generations of technology I’ve seen in the legislative information technology market.

The Mainframe Wave

The first era I’m familiar with came about in the 1980s with the advent of markup languages and text-oriented databases such as TextDBMS in the US. These were all mainframe solutions, arriving late in the mainframe era of computing. The systems were quite simple, built around formatting-oriented markup intended for one primary result – a paper document. In this era, the effort to use a computer system was still quite substantial, and typically the drafting attorney worked with technical data entry staff to enter the information into the computer. This was the mainframe technology generation. Today it is entirely obsolete and there are few implementations left in the wild.

The Office Productivity Wave

In the 1990s things rapidly changed. Personal computing matured and office productivity tools became far more integrated and programmable. This led to a new wave of technology being adopted around office productivity word processing tools such as WordPerfect, Microsoft Word, and to a smaller extent OpenOffice, which came along late in the cycle. A big change was that the drafting attorneys became the ones entering the data – no longer was it necessary to have a dedicated technical staff to do data entry. The focus was still largely on documents intended for printing, but supporting automation started coming into the picture. Information storage was quite simple, usually either plain file shares or content management systems which managed documents as files.

There was a problem, however. Many organizations quickly found they had “thousands of macros”. And with those thousands of macros, maintenance became a serious issue. Office productivity tools serve a very broad and competitive market. For the makers of productivity tools, ensuring that their offering remains evergreen has always meant constantly refreshing the user interface and data formats. Supporting large customizations of prior versions of software has been of secondary or even tertiary concern. As a result, legislative IT systems built in this era have often become stuck on one version of the software or another with no clear way forward. Often, that version is very old – 10 to 15 years old is not uncommon.

The XML Wave

In the early 2000s, a new wave started to form – pushed by the emergence of XML, the development of the web, and changing expectations of the role of computing in everyone’s lives. Rather than being tools simply for producing documents, computers had quickly become tools for the production and consumption of information. The shift from document-centric to information-centric computing started to be made possible by a number of new developments, especially around XML. Increasingly, the document became viewed as something to be partitioned into chunks of information that could be processed with more sophisticated automation than was possible with office productivity tools. This led to the need for more sophisticated database or repository technologies than were possible with content management systems or file shares.

There were a few problems with this era, however. While the vision and some foundational technologies were defined, much work remained to be done. Many of the technologies that were pressed into service were merely adaptations from a previous era that barely was – the SGML era. The result was a lot of tools that were pretenders (or placeholders) for tools that were still to come. (I briefly worked for a database company that was one of these pretenders – and the more I knew about XML, the more I realized our product deserved no place in the marketplace.)

With many standards still in development and only crude tools available, making all the pieces work well together was quite a challenge – the result often being failure and reverting to tried-and-true approaches from the earlier office productivity era. Technologies like WebDAV and SOAP (anyone remember SOAP?) provided ways to integrate disparate tools together, but the effort was still substantial.

Microsoft’s domination of the browser market, along with its lack of interest in standards it hadn’t created, meant that web technology stalled for a while and web-based solutions played a lesser role than they otherwise might have. The solutions that were built were a bit of a hodgepodge of pieces that were forced to work together.

The Standards Wave

It was the breaking of Microsoft’s grip on the browser market that led to a sea change in web technologies starting in the early 2010s. Of the technologies we used to build XML systems in the early 2000s, virtually nothing is the same today – and that’s a very good thing. Proprietary database technology that predated standards, overly complicated integration technologies like SOAP, and proprietary technology stacks like COM/ActiveX (and to some extent .NET and Java too) have all given way to more standardized alternatives.

Perhaps it is the failure of the big traditional players to exert their control that has been the biggest change. Technologies where the big guys have tried to force their will have been shunned. Real standards, developed by a good cross section of the industry, have thrived while proprietary technologies have withered.

XML’s role has changed too. In the prior generation, XML had a bit of an outsized role – both as a means for modeling documents and as a means for transferring data. With time, however, XML has lost some of its shiny newness and JSON has taken on much of XML’s role as a means for data transfer. (And no, JSON is not a good idea for modeling a document – it’s a terrible one.) Today XML makes sense doing that which it does best – modeling complex documents.
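To make that parenthetical a little more concrete, here is a minimal Python sketch of why document text sits awkwardly in JSON. The fragment and its element names (`term`, `ref`) are made up for illustration and are not real Akoma Ntoso markup. The sticking point is mixed content: running text with inline elements embedded in it, where order matters.

```python
import xml.etree.ElementTree as ET

# Hypothetical fragment of legislative text (illustrative element names only).
fragment = (
    '<p>The <term>Secretary</term> shall report under '
    '<ref href="#sec2">section 2</ref> annually.</p>'
)
p = ET.fromstring(fragment)

# XML keeps the running text and the inline markup in one ordered stream.
plain_text = "".join(p.itertext())

# A naive JSON-style mapping keyed by element name loses the surrounding
# text and the position of each inline element within the sentence.
naive = {child.tag: child.text for child in p}


def to_ordered(elem):
    """Convert an element's content into a JSON-compatible ordered list.

    Preserving order forces a list of text runs and tagged nodes,
    which amounts to re-inventing mixed content by hand.
    """
    parts = []
    if elem.text:
        parts.append(elem.text)
    for child in elem:
        parts.append({
            "tag": child.tag,
            "attrs": dict(child.attrib),
            "content": to_ordered(child),
        })
        if child.tail:
            parts.append(child.tail)
    return parts


ordered = to_ordered(p)
```

The naive mapping keeps only the two inline elements and drops the sentence around them. The ordered form does survive a round trip through JSON, but it is noticeably clumsier than the one-line XML it came from, which is roughly the point.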

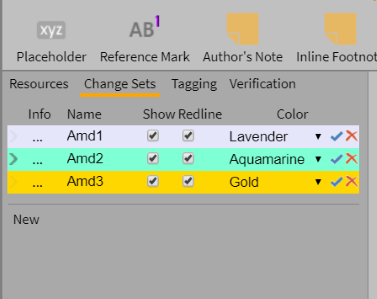

Xcential has been working hard on being ready for the standards-based wave of legislative information systems. We have aggressively chosen to adopt emerging technologies and ditch the boat anchors of the past. LegisPro, our platform for legislative systems, has been designed to be future-proof to the greatest extent possible – even when future-proof means it’s not backward compatible with obsolete or non-standards-compliant legacy tools. We build on top of non-proprietary database technology using XQuery, and our information model is now an international standard: Akoma Ntoso, which we worked hard to standardize through our OASIS participation.

By my estimation, the standards wave began slowly around 2010 and will soon mature. It’s now at that sweet spot where the technologies are settling out and it’s time to jump on the wave for the optimal ride. That’s why we have products ready to go. But it also means we should start to look for the next wave – and I think I see it. In my next blog, I will describe what I think comes next.